I know this is a long post. If you can't commit, I have broken this post up into six smaller ones starting with this one.

As you read this post, keep one thing in mind: while the predicted timeframe may be off by a few years (or decades) in some cases, all of these things are likely to happen. We are at the precipice of an amazing time in human history!

Earth has received a message from an alien intellect alerting us to their arrival in 30–50 years. There are promises of great advances and technologies — some of which we cannot even imagine. There is also the threat of being dominated, enslaved — or worse — annihilated.

This is not a hypothetical fiction or fantasy. This is the actual state of the world today. We are 30 to 50 years away from the arrival of an intelligence that will dwarf our own by a wider margin than human intellect towers over that of ants. Many of us are even devoting our lives to welcoming these intelligent entities.

But really our entire planet should unify in purpose, set aside pettiness, and work together. We should earnestly prepare for this arrival and all of the astonishing and terrifying knowledge and technologies it will bring. We should create a plan to endear ourselves to these strange new forces. We should try to pass our values to them and share our goals with them to stave off the myriad threats these entities will present while ushering in wondrous advances, prosperity, and even immortality.¹

Despite the fact that we are actively developing these new alien² intellects, we know nearly as little about these artificial intelligences as we would about an extraterrestrial visitor from another part of the cosmos. All we know for sure is that they are coming. The fun (and scary) part is speculating on what sorts of things they will bring with them.

Like you, I’ve been hearing about artificial intelligence (AI) for the better part of my life. And like you, I conjured up ideas of androids, robots, and a dystopian future. An emerging group of researchers is proselytizing, however, that we must not wait for the future to unfold like passive onlookers, but instead, we need to actively work towards creating the outcomes we want to see.

I, for one, want to see a fair justice system, safe transportation where millions don’t die each year, cities and buildings designed to truly accommodate our needs, an intelligent use of natural resources and efficient production of energy, the restoration of our wild areas — including reviving some extinct creatures, once again increasing biodiversity and repairing the environmental damage we’ve done, ending scarcity and the requirement of work, bringing about a new renaissance of creativity and innovation unlike anything the world has ever seen, the elimination of all ailments and disease, the eradication of suffering, and — most epically — an end to death itself.

These outcomes will all be possible if we succeed in developing artificial superintelligence and, more importantly, align its goals with our own.

This is a huge topic and I won’t cover nearly everything in this post. I hope to motivate readers to think about this topic, learn more, and have many conversations about this with their families and friends. In this post I’ll seek to answer these three questions:

- How probable is it that we’ll develop AI that is smarter than humans?

- When might we develop such an AI?

- What impact will such development have on humanity?

I’m writing about artificial intelligence but that is too broad and needs to be further defined. We should start with what I mean by intelligence.

Intelligence — Intelligence is the ability to accomplish complex goals.

I’ve come to use this definition put forth by Max Tegmark in his book Life 3.0: Being Human in the Age of Artificial Intelligence. It is both broad enough to include those activities shared by human and non-human animals as well as by computers and not so broad as to get into questions of consciousness or sentience³. Intelligence cannot be measured using a single criterion or scored using a single metric like I.Q. because intelligence is not a single dimension. In the Wired article The Myth of a Superhuman AI, Kevin Kelly states “‘smarter than humans’ is a meaningless concept. Humans do not have general purpose minds, and neither will AIs.” There are cognitive activities at which certain animals perform better than us and some that machines do better than us. This is a good reminder that evolution isn’t a ladder and we’re not at the top of it. Evolution creates adaptations that are best suited for particular circumstances.

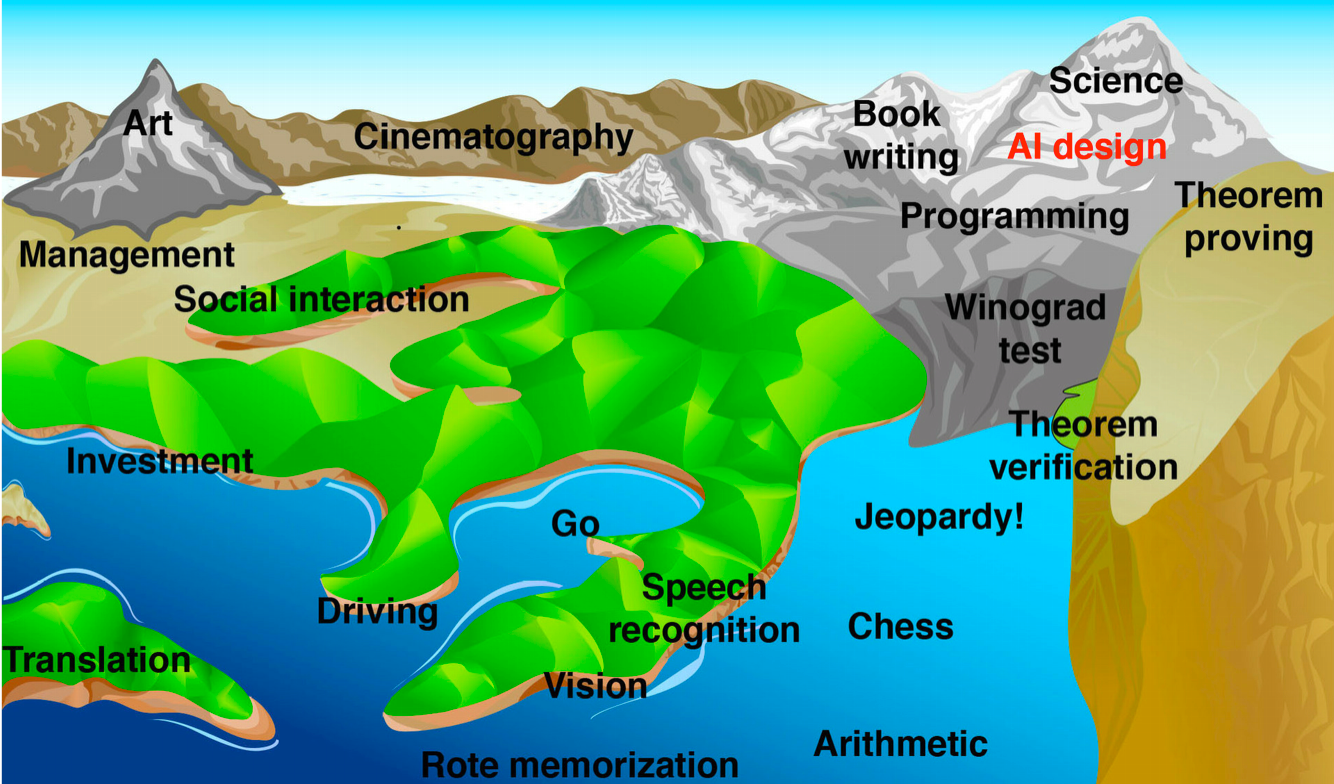

Artificial Narrow Intelligence (ANI) — Intelligence that is specialized in accomplishing a single or narrow goal.

This is the type of AI we are used to today. Google Search, Amazon Alexa, Apple Siri, nearly all airline ticketing systems, and countless additional products and services are using AI to identify people and objects in photos, translate language (written and spoken), master games (chess, Jeopardy, Go, and videogames), drive cars, and more. We come into contact with this type of AI every day and, for the most part, it doesn’t seem very magical. These sorts of AI can generally do only one thing well, and they have emerged slowly during the past few decades. Many of these now seem mundane.

MarI/O is made of neural networks & genetic algorithms that have learned to kick butt at Super Mario World.

Artificial General Intelligence (AGI) — Intelligence that is as capable as a human in accomplishing goals across a wide spectrum of circumstances.

You and I excel at various things: we can recognize patterns, combine competencies, solve problems, create art, and learn skills we don’t yet have. We use logic, creativity, empathy, and recall, each requiring a different sort of intelligence. We take this diversity and breadth of intelligence for granted, but creating this type of intelligence artificially is the great challenge of our time and is what most people in the field of AI research are trying to accomplish: creating a computer that is as smart as us across many different dimensions and, crucially, one that can teach itself new things.

Many tests have been devised to ascertain when machine intelligences “become as smart as humans.” These range from the well-known Turing Test to the absurd Coffee Test. They all purport to assess a general capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, and learn quickly from experience. I’m not sure, however, that machines won’t be supremely impressive well short of passing these tests. I imagine stringing together hundreds or thousands of disparate ANIs into something far more capable than any human in many ways. At that point, it may very well appear to us to be super-human…

Artificial Super Intelligence (ASI) — Intelligence that is better than any human at accomplishing any goal across virtually any situation.

This is most often how people describe computers that are much smarter than humans across a wide range of intelligences. Some say all intelligences, but I’m not sure that is the threshold that need be passed. Of course, computers are already superhuman in some arenas. I’ve mentioned some specific games in which humans can no longer compete, but computers have been better than humans at other things for decades — things like mathematical computation, financial market strategy, memorization, and memory recall come to mind. But soon more and more of those competencies at which humans excel — things involving language translation, visual acuity, perception, and creativity — will be conquered by computers as the following illustration shows.

1. How probable is it that we’ll develop AI that is smarter than humans?

Of the three questions, this is the least fun but also, perhaps, the easiest to answer. At a recent conference composed of the people most involved in working in the field of artificial intelligence, participants were asked when human-level intelligence would be achieved, and fewer than 2% of the respondents believed the answer to be never. This poll asked specifically about AGI and not ASI. However, many people (including me) believe that the difficult task is developing a sufficiently advanced AI that can teach itself new things (AGI) and that a super-intelligent version will emerge quite quickly thereafter. Some think this emergence could take years or decades once we arrive at AGI. Others warn that it could be just a matter of hours after that first AGI is developed due to the speed of recursive self-improvement. It is this capacity for recursive self-improvement that many software engineers are indeed hoping to instill in their programs to speed development. It is also this ability that could very easily see our world go from no human-level AIs to one to the first super-human AI very rapidly.

While there are still a few skeptics who believe we will never develop human-level intelligence or that it won’t be for hundreds of years, a growing consensus among those closest to these efforts⁴ holds that it will happen, and soon…

2. When might we develop such an AI?

Here’s where we are right now:

We’re at this point in time, just before a massive spike in human progress. This has occurred before with the Industrial Revolution and the advent of the Internet, but this spike will dwarf those. Due to the Law of Accelerating Returns (the idea that advancement is an evolutionary process that speeds up exponentially over time), life in 2040, just a scant 23 years away, may be as different from today as life today is from medieval times. It may be so different that we can no more picture it than could a serf from the Middle Ages understand the technologies used to create a website or a cellphone.

When we consider the timeline and effects of superintelligent AI systems and overlay them on top of our expected lifetimes, we see the enormous, wondrous, and scary impacts that we (you and I) can expect. Let’s check in on those same AI researchers for their expert opinions. A large majority (>68%) believed we would develop AGI by 2050, with 43% of respondents believing this would occur by 2030.

So in 13 to 33 years, there is believed to be a good chance that we will have developed a computer program that is at least as intelligent as us. This will include many different types of intelligence including the ability to learn on its own.

It seems hard to fathom it going this fast. It doesn’t feel like things will advance this quickly. If you’re like me, you read about new tech daily and buy the latest and greatest gadgets and gizmos. The pace of technological advancement is slooooow, right? But our perception is flawed.

One of the most optimistic and vocal proponents of this new era being ushered in is Ray Kurzweil. He formulated the aforementioned Law of Accelerating Returns, based many of his predictions on it, and expects AGI to arrive by 2029. Again I turn to Wait But Why:

“The average rate of advancement between 1985 and 2015 was higher than the rate between 1955 and 1985 — because the former was a more advanced world — so much more change happened in the most recent 30 years than in the prior 30.”

Kurzweil “believes another 20th century’s worth of progress happened between 2000 and 2014 and that another 20th century’s worth of progress will happen by 2021, in only seven years. A couple decades later, he believes a 20th century’s worth of progress will happen multiple times in the same year, and even later, in less than one month.” And “all in all, that the 21st century will achieve 1,000 times the progress of the 20th century.”

This is due to the power of exponential growth versus the linear growth of which we often think.⁵

Probably closer to 2030 to 2040 imo. 2060 would be a linear extrapolation, but progress is exponential. https://t.co/e6gyOVcMZG

— Elon Musk (@elonmusk) June 6, 2017
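To make the linear-versus-exponential point concrete, here is a minimal sketch. The function names and the 10-year doubling time are my own assumptions for illustration only; they are not figures from Kurzweil or from the survey above. It simply compares how much cumulative "progress" piles up when the yearly rate stays flat versus when it keeps doubling.

```python
import math


def linear_progress(years, rate=1.0):
    """Cumulative progress if the yearly rate never changes."""
    return rate * years


def exponential_progress(years, doubling_time=10.0, rate=1.0):
    """Cumulative progress if the yearly rate doubles every `doubling_time` years.

    This is the integral of rate * 2**(t / doubling_time) from 0 to `years`.
    The 10-year default is an assumed, illustrative value.
    """
    k = math.log(2) / doubling_time
    return rate * (math.exp(k * years) - 1) / k


if __name__ == "__main__":
    for horizon in (10, 30, 50):
        lin = linear_progress(horizon)
        exp = exponential_progress(horizon)
        print(f"{horizon} years: linear={lin:.0f}, exponential={exp:.0f}")
```

Under these made-up numbers the two curves stay fairly close over the first decade and then pull apart quickly, which is roughly why a straight-line guess feels reasonable right up until it isn't.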

3. What impact will such development have on humanity?

The remainder of this post will lay out what life could look like once we are no longer the most (generally) intelligent being on the planet. We’ll touch on both the good and bad outcomes (for humans) and describe some ways in which we could hope to nudge things toward the better outcomes.

It will likely be impossible to know exactly “what” and “how” these AIs are thinking. My guess is they will have advanced through a combination of directed programming and training from humans as well as recursive self-development efforts of their own. Once they can efficiently self-improve, we will quickly lose our ability to know what the hell is going on within them or comprehend what they are doing. It’s not just that an ASI will think a million⁶ times faster than us, it’s that the quality of the thinking will be that much better.

Here’s an example from Wait But Why that illustrates the gulf between human cognition and that of chimps.

“What makes humans so much more intellectually capable than chimps isn’t a difference in thinking speed — it’s that human brains contain a number of sophisticated cognitive modules that enable things like complex linguistic representations or long-term planning or abstract reasoning, that chimps’ brains do not. Speeding up a chimp’s brain by thousands of times wouldn’t bring him to our level — even with a decade’s time, he wouldn’t be able to figure out how to use a set of custom tools to assemble an intricate model, something a human could knock out in a few hours. There are worlds of human cognitive function a chimp will simply never be capable of, no matter how much time he spends trying.

But it’s not just that a chimp can’t do what we do, it’s that his brain is unable to grasp that those worlds even exist — a chimp can become familiar with what a human is and what a skyscraper is, but he’ll never be able to understand that the skyscraper was built by humans. In his world, anything that huge is part of nature, period, and not only is it beyond him to build a skyscraper, it’s beyond him to realize that anyone can build a skyscraper. That’s the result of a small difference in intelligence quality.

And in the scheme of the intelligence range we’re talking about today, or even the much smaller range among biological creatures, the chimp-to-human quality intelligence gap is tiny.”

And it is tiny. We are pretty close in intellect to chimps, no offense. In fact, there are intellectual tasks that chimps can perform better than us.

Chimps can recognize and recall certain patterns far better than we can. Don’t be so full of yourself.

So let’s say we now imagine a superintelligent machine that represents a cognitive gap equal to the one between us and the chimp. But in this example, we’re the chimps and the machine is trying to explain things to us that we cannot comprehend. Even these small gaps in level present huge chasms in understanding.

Now let’s examine the much larger gap between us and ants. An ant building an anthill on the side of a highway has no idea what that highway is. It cannot possibly comprehend how we built it, what we use it for, what benefits it bestows upon us, or even who we are. Attempting to explain any of this to the ant is a preposterous idea for good reason. Even if we somehow could transmit our language or thoughts to ants, they are simply not capable of comprehending any of it.

A machine with the sort of smarts we’re talking about would be about as far away from us as we are from ants. The gap would be huge. We could not comprehend what the machine was doing and it would be pointless for the machine to attempt to explain it to us. Tim Urban uses a staircase metaphor to explain this.

“In an intelligence explosion — where the smarter a machine gets, the quicker it’s able to increase its own intelligence, until it begins to soar upwards — a machine might take years to rise from the chimp step to the one above it, but perhaps only hours to jump up a step once it’s on the dark green step two above us, and by the time it’s ten steps above us, it might be jumping up in four-step leaps every second that goes by. Which is why we need to realize that it’s distinctly possible that very shortly after the big news story about the first machine reaching human-level AGI, we might be facing the reality of coexisting on the Earth with something that’s here on the staircase (or maybe a million times higher).”

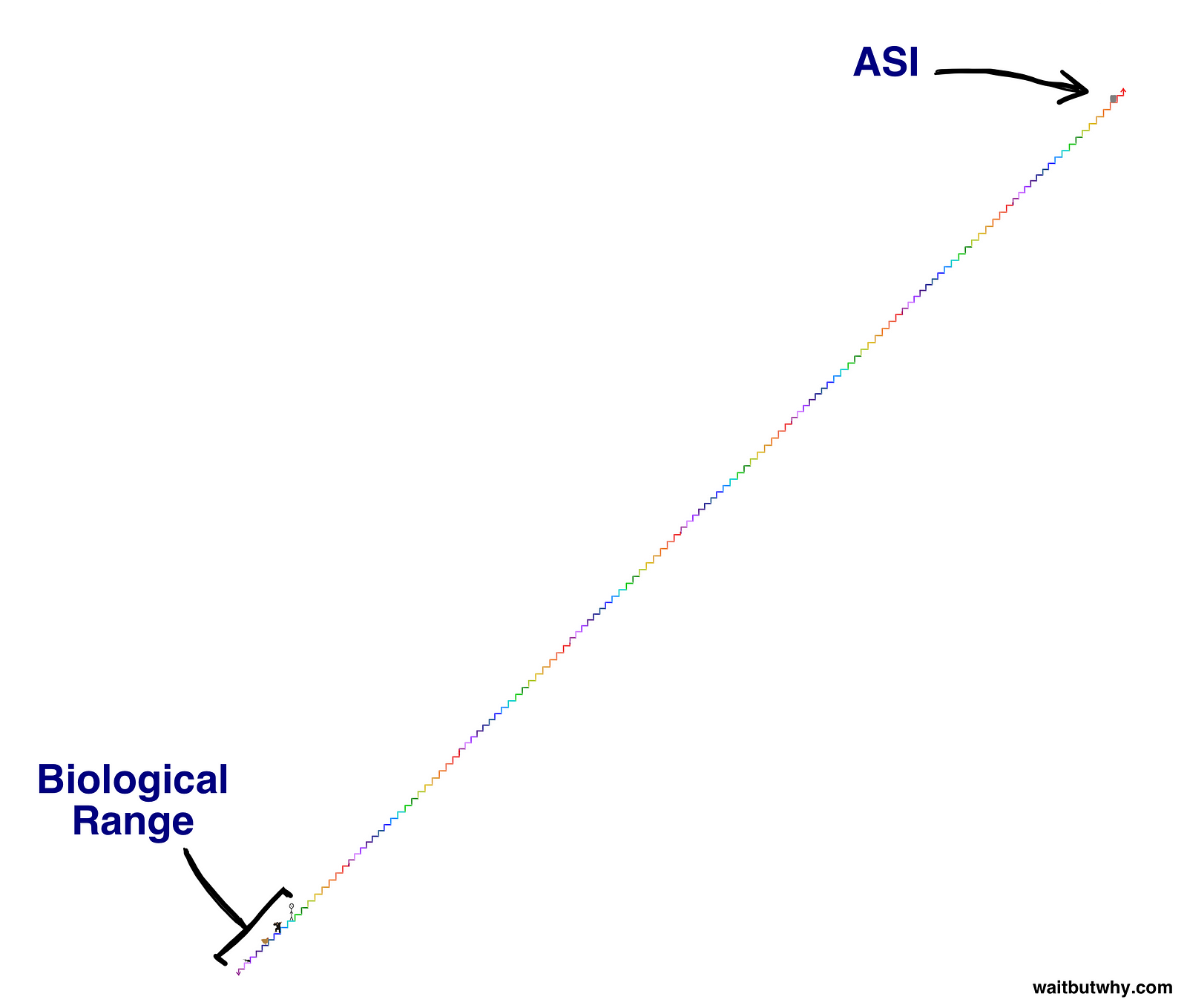

The biological range of intelligence on the left, and an ASI well above that range on the right.

“And since we just established that it’s a hopeless activity to try to understand the power of a machine only two steps above us, let’s very concretely state once and for all that there is no way to know what ASI will do or what the consequences will be for us. Anyone who pretends otherwise doesn’t understand what superintelligence means.”
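The staircase idea, where each step up makes the next step quicker, can be sketched as a toy model. Everything numeric here is an assumption of mine and not something Tim Urban states: the first step is taken to last five years and every later step is assumed to be twice as fast as the one before it. The function names are hypothetical too.

```python
def step_times(steps, first_step_years=5.0, speedup=2.0):
    """Years spent on each successive step of the staircase.

    Assumes (purely for illustration) that every step is `speedup`
    times quicker than the previous one.
    """
    return [first_step_years / speedup ** i for i in range(steps)]


def years_to_reach(step, **kwargs):
    """Total years needed to climb `step` steps under the same assumptions."""
    return sum(step_times(step, **kwargs))


if __name__ == "__main__":
    for i, years in enumerate(step_times(8), start=1):
        print(f"step {i}: {years * 365:.0f} days")
    print(f"total for 20 steps: {years_to_reach(20):.4f} years")
```

With these assumed numbers the total never exceeds ten years no matter how many steps are climbed, because the later steps take almost no time at all. That is the "years, then hours, then seconds" shape of the quote, not a prediction.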

Ok, enough of making ourselves feel inferior. All of this is a way of stating that the sorts of things this uber-powerful, intelligent machine will be able to do will be amazing. Let’s get into some of these things.

The Good

It cannot be overstated that so many of the problems plaguing our world today are very solvable. Given today’s abundant wealth and advanced technologies, we need not have any hunger, pollution, energy needs, traffic deaths, political inefficiency, terrorism, or wars. If we better distributed and deployed the innovations and resources we have right now, these would all be things of the past. We’ve mapped the human genome and are manipulating DNA, we’re learning more ways to deploy nanotechnology, and we could colonize the moon, and probably Mars in five years if we had the will. There is no end to the things we can do — all with our puny monkey brains. A smarter entity could greatly hasten these advancements and usher in many more undiscovered and unthinkable (for us) ones.

Everything we make, indeed, anything that has ever been made, is made up of atoms. And there aren’t that many of them — hydrogen and helium make up 98% of the universe’s matter⁷ and 98.8% of Earth’s mass comes from just 8 elements: iron, oxygen, silicon, magnesium, sulfur, nickel, calcium, and aluminum. We are getting closer and closer to being able to manipulate the smallest known bits of our universe. For example:

- Genetics — Scientists have now successfully edited a human embryo to “delete” a gene linked to heart conditions. Additionally, genetic modifications to neurons in the brain show that genetic editing is not restricted to growing cells but works in mature cells as well.⁸

- Quantum Computing — Advances at Google, IBM, Intel, Microsoft, and several research groups indicate that computers with previously unimaginable power are finally within reach.⁹

- Nanotechnology — Researchers have found ways to use nanochip technology to modify skin cells into other functional cells simply by placing a device with an electrical field on a person’s skin.¹⁰

Once we master nanotechnology, the next step will be manipulating individual atoms (only one order of magnitude smaller). It stands to reason that once we can break things down to their smallest elements and build them back up (moving individual atoms or molecules around), we will be able to build, literally, anything.

What can we* achieve with the help of a superhuman intellect? Disease, poverty, environmental destruction, scarcity, and unnecessary suffering of all kinds could be eliminated by a superintelligence equipped with advanced nanotechnology.¹¹

* using the “royal we” here as the AI will actually be doing it.

Furthermore, “a superintelligence could give us indefinite lifespan, either by stopping and reversing the aging process through the use of nanomedicine, or by offering us the option to upload ourselves,” says Nick Bostrom, author of Superintelligence: Paths, Dangers, Strategies.¹²

Here’s another excerpt from Tim Urban’s post about the AI Revolution:

What AI Could Do For Us?

Armed with superintelligence and all the technology superintelligence would know how to create, ASI would likely be able to solve every problem in humanity. Global warming? ASI could first halt CO2 emissions by coming up with much better ways to generate energy that had nothing to do with fossil fuels. Then it could create some innovative way to begin to remove excess CO2 from the atmosphere. Cancer and other diseases? No problem for ASI — health and medicine would be revolutionized beyond imagination. World hunger? ASI could use things like nanotech to build meat from scratch that would be molecularly identical to real meat — in other words, it would be real meat. Nanotech could turn a pile of garbage into a huge vat of fresh meat or other food (which wouldn’t have to have its normal shape — picture a giant cube of apple) — and distribute all this food around the world using ultra-advanced transportation. Of course, this would also be great for animals, who wouldn’t have to get killed by humans much anymore, and ASI could do lots of other things to save endangered species or even bring back extinct species through work with preserved DNA. ASI could even solve our most complex macro issues — our debates over how economies should be run and how world trade is best facilitated, even our haziest grapplings in philosophy or ethics — would all be painfully obvious to ASI.

But there’s one thing ASI could do for us that is so tantalizing, reading about it has altered everything I thought I knew about everything:

ASI could allow us to conquer our mortality.

Now that we’ve come to believe it possible that death itself could be eliminated, it seems a bit superfluous to enumerate all the ways a sufficiently advanced AI could transform modern life by reshaping transportation, legal systems, governments, health, science, finance, and the military, so I think it best to leave those topics for individual future posts.

So far these alien overlords don’t seem half bad. If they bring with them all of these things that help us finally clean up our acts and maybe immortality to boot? I’ll take it. Then again, how can we be sure they will want to help us?

The Bad

These alien intelligences could also bring with them some pretty terrible things, for us. First, though, there are likely some consequences that will arrive prior to the AGI or ASI. We’re already seeing some of these: automated weapons systems, increased surveillance activities, loss of privacy and anonymity. These are things that humans are directing machine learning, artificial intelligence, and robotics to do.

The motivations for these things are pretty clear — safety and security, strategic advantage, economic gains — because they are the same sort of things that have been motivating humans for millennia. While we may not agree with the tactics, we recognize why someone may use them.

This won’t be the case with superintelligent AI systems. There won’t likely be malice when we are eliminated. It just may be the most logical or efficient course of action. Referring back to the ants: we don’t have malice when we wipe out a colony of ants to build a new highway. We simply don’t think of the consequences for the ants because they don’t warrant that type of concern. Which brings us to the largest fear that those involved in AI research have: that we are bringing about our extinction.

Can’t we just pull the plug? Don’t they just do what we program them to do? They wouldn’t want to hurt us, would they? Who would be in charge of an ASI? All these questions point out the big problem with developing AGI and, by proxy, ASI: we simply don’t know what life will be like after ASI arrives. We’re creating something that could change nearly everything but we really do not know in what ways.

There are four main scenarios where things could go very wrong for us:

- A self-improving, autonomous weapons system “breaks out”.

- Various groups will race to develop the first ASI and use it to gain advantage and control.

- An ASI itself will turn malicious and seek to destroy us.

- We don’t align the goals of the ASI with our own.

Now let’s look at each of these in turn and determine just which we should concern ourselves with the most.

1. A self-improving, autonomous weapons system “breaks out”.

“[Lethal autonomous robots] have been called the third revolution of warfare after gunpowder and nuclear weapons,” says Bonnie Docherty of Harvard Law School. “They would completely alter the way wars are fought in ways we probably can’t even imagine.” And that “killer robots could start an arms race and also obscure who is held responsible for war crimes.”

Militaries around the world are investing in robotics and autonomous weapon systems. There are good reasons for this that range from saving human lives (both soldiers and civilians) to greater efficiency. But what happens when an autonomous system with a goal to improve the efficiency with which it kills reaches a point where it is no longer controllable? Unlike nuclear weapons, weaponized AI will be cheap and easy to acquire once it has been developed, which will allow terrorists and other rogue parties to purchase such technology on black markets. These actors won’t show the relative restraint of nation-states. This is a very real problem and we’ll see more news about this well before we get to a world with ASI.

2. Various groups will race to develop the first ASI and use it to gain advantage and control.

Similar to the arms race mentioned above, nations, companies, and others will be competing to develop the first ASI because they know that the first one developed has a good chance of suppressing all others and being the only ASI ever to exist. In the opening chapter of his book Life 3.0, Max Tegmark lays out how a company that builds an ASI manages to take control of the world. The Tale of the Omega Team is worth the price of the book in and of itself. It lays out all the advantages the “first mover” to AGI and then ASI gains and all of the rewards that come along with that. It will be hard to convince everyone on Earth to leave that fruit on the tree. This is one of the reasons I believe the move from ANI to AGI/ASI is inevitable. I hope (maybe naively) that it is some group with good intentions that wins this “last race”.

But these stories need not be relegated to the hypothetical. Russia and China are racing to develop ever more sophisticated systems as the West tries to create guardrails.

3. An ASI itself will turn malicious and seek to destroy us.

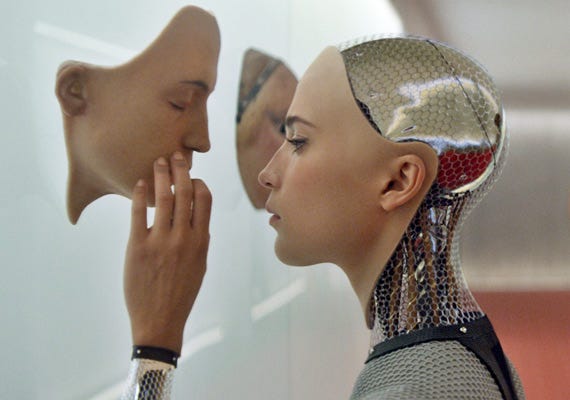

Unlike the scenarios above, this requires the AI to “want” to hurt humans of its own volition. Neither I nor serious AI researchers are concerned with this scenario. AI won’t want to hurt us because they won’t want. They won’t have emotions and they won’t think like us. Anthropomorphizing² machines/programs in this way is not useful, and could actually be quite dangerous.

It will be very tempting to look upon these programs — that may indeed mimic human speech, comprehension, and emotions — and believe they are like us, that they feel like us and are motivated as we are. Unless we specifically attempt to create this in them, artificial intelligences will not be made in this image.

4. We don’t align the goals of the ASI with our own.

This is the scenario most AI safety researchers* spend most of their time on. The concept of aligning the goals of artificial intelligences with our own is important for a number of reasons. As we’ve already defined, intelligence is simply the ability to accomplish complex goals. Turns out machines are very good at this. You could say that it is what we built them for. But as machines get more complex, so does the task of goal setting.

* Yes, AI Safety is a thing that organizations like The Future of Life Institute and the Machine Intelligence Research Institute (MIRI) spend a lot of time studying.

One could make a compelling argument (if you’re a biologist) that evolutionary biology really only has one primary goal for all life: to pass on genetic instructions to offspring via reproduction. Stated more crudely, our main goal as humans is to engage in sex and have babies. But as you may have noticed, even though we spend a fair bit of energy on the matter, that isn’t the thing we do all the time. We have developed sub-goals like eating, finding shelter, and socializing that serve to help keep us alive long enough to get to the sexy times. Once we have kids, even though all we have to do is raise them until they themselves are old enough to procreate, we still find other things with which to occupy ourselves. We go on hikes, surf, take photos, build with LEGOs, walk our dogs, go on trips, read books, and write blog posts, none of which furthers our goal of passing on our genes.

We even do things to thwart our one job on planet Earth. Some of us use birth control or decide not to have children altogether. This flies in the face of our primary goal. Why do we do things like this? Let’s refer to Max Tegmark’s book Life 3.0 again:

Why do we sometimes choose to rebel against our genes and their replication goal? We rebel because by design, as agents of bounded rationality — we create/seek “rules of thumb”, we’re loyal only to our feelings. Although our brains evolved merely to help copy our genes, our brains couldn’t care less about this goal since we have no feelings related to genes…

In summary, a living organism is an agent of bounded rationality that doesn’t pursue a single goal, but instead follows rules of thumb for what to pursue and avoid. Our human minds perceive these evolved rules of thumb as feelings, which usually (and often without us being aware of it) guide our decision making toward the ultimate goal of replication.

[but] since our feelings implement merely rules of thumb that aren’t appropriate in all situations, human behavior strictly speaking doesn’t have a single well-defined goal at all.¹³

“The real risk with AGI isn’t malice but competence. A superintelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we’re in trouble.”

Every post about AI existential risk must mention Swedish philosopher Nick Bostrom’s “paperclip parable”.¹⁴ It’s sorta like a restatement of the genie or Midas problems: be careful what you wish for.

“But, say one day we create a super intelligence and we ask it to make as many paper clips as possible. Maybe we built it to run our paper-clip factory. If we were to think through what it would actually mean to configure the universe in a way that maximizes the number of paper clips that exist, you realize that such an AI would have incentives, instrumental reasons, to harm humans. Maybe it would want to get rid of humans, so we don’t switch it off, because then there would be fewer paper clips. Human bodies consist of a lot of atoms and they can be used to build more paper clips. If you plug into a super-intelligent machine with almost any goal you can imagine, most would be inconsistent with the survival and flourishing of the human civilization.”

— Nick Bostrom, Paperclip Maximizer at LessWrong
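A toy version of the parable shows how little "intent" is needed for this to go wrong. This is only a sketch under assumptions I made up for illustration: the `World` class, its fields, and the `protect_habitat` flag are hypothetical, and a real system would look nothing like this. The only point is that an objective counting paperclips and nothing else treats everything as raw material.

```python
from dataclasses import dataclass


@dataclass
class World:
    spare_metal: int      # resources nobody would miss (arbitrary units)
    human_habitat: int    # resources humans depend on (arbitrary units)
    paperclips: int = 0


def maximize_paperclips(world, protect_habitat=False):
    """Greedy toy agent: converts every resource it is allowed to touch.

    The objective only counts paperclips. Unless `protect_habitat` is set
    explicitly, nothing in the goal tells the agent that habitat matters.
    """
    world.paperclips += world.spare_metal
    world.spare_metal = 0
    if not protect_habitat:
        world.paperclips += world.human_habitat
        world.human_habitat = 0
    return world


if __name__ == "__main__":
    print(maximize_paperclips(World(spare_metal=10, human_habitat=90)))
    print(maximize_paperclips(World(spare_metal=10, human_habitat=90),
                              protect_habitat=True))
```

The unconstrained run scores higher on the stated goal, which is exactly the problem: the "better" outcome by the machine's measure is the one where nothing is left for us. Adding the protection by hand works here only because the toy world has a single thing worth protecting.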

So what should we do about this? AI/Human goal alignment is likely to be a recurring theme here at AltText for a while, so we will only touch on it now. Life 3.0 puts forth a pretty basic-sounding plan designed to make future AI safe, involving:

- Making AI learn our goals

- Making AI adopt our goals

- Making AI retain our goals

Sounds simple enough, but it is actually incredibly difficult to define and carry out a plan like this. And we may only get one shot at it since the first ASI is likely to be the only one to ever be created.

This goal-alignment problem will be the deciding factor of whether we become immortal or extinct when artificial superintelligence becomes a reality.

Nuh-uh

When I tell people that they have bought their last car, that we’ll soon eat only lab-grown meat, that we’ll colonize Mars in the next 20 years, or that they will be able to live forever if they can hold out for another 30–40 years, I get polite nods, indignant scoffs, incredulous questions, or outright rejections. But these things will likely happen.

You may be sitting there — even having read this entire post — thinking this is all pretty ridiculous. You’re not going to concern yourself with some robot doomsday/fantasy scenario. Siri and Alexa can’t even answer simple questions or even understand what we ask of them half the time. It feels more right to be skeptical of my assertions of what will happen (and when). But is it right? If we use only logic and rationality and look at historical patterns, we should expect much, much more change to occur in the coming decades than we intuitively expect.

It is just so hard for us to imagine everything that superhuman intelligence will make possible. And for it, all of that will be easy.

Tim Urban ascribes our skepticism to three main things:

When it comes to history, we think in straight lines. When we imagine the progress of the next 30 years, we look back to the progress of the previous 30 as an indicator of how much will likely happen.

The trajectory of very recent history often tells a distorted story. First, even a steep exponential curve seems linear when you only look at a tiny slice of it, the same way if you look at a little segment of a huge circle up close, it looks almost like a straight line. Second, exponential growth isn’t totally smooth and uniform. Kurzweil explains that progress happens in “S-curves”. (remember those?⁵)

Our own experience makes us stubborn old men about the future. We base our ideas about the world on our personal experience, and that experience has ingrained the rate of growth of the recent past in our heads as “the way things happen.” We’re also limited by our imagination, which takes our experience and uses it to conjure future predictions — but often, what we know simply doesn’t give us the tools to think accurately about the future.

Urban goes on to explain why everyone isn’t focused on this:

…movies have really confused things by presenting unrealistic AI scenarios that make us feel like AI isn’t something to be taken seriously in general. James Barrat compares the situation to our reaction if the Centers for Disease Control issued a serious warning about vampires in our future.

Due to something called cognitive biases, we have a hard time believing something is real until we see proof. I’m sure computer scientists in 1988 were regularly talking about how big a deal the internet was likely to be, but people probably didn’t really think it was going to change their lives until it actually changed their lives. This is partially because computers just couldn’t do stuff like that in 1988, so people would look at their computer and think, “Really? That’s gonna be a life changing thing?” Their imaginations were limited to what their personal experience had taught them about what a computer was, which made it very hard to vividly picture what computers might become. The same thing is happening now with AI. We hear that it’s gonna be a big deal, but because it hasn’t happened yet, and because of our experience with the relatively impotent AI in our current world, we have a hard time really believing this is going to change our lives dramatically. And those biases are what experts are up against as they frantically try to get our attention through the noise of collective daily self-absorption.

Even if we did believe it — how many times today have you thought about the fact that you’ll spend most of the rest of eternity not existing? Not many, right? Even though it’s a far more intense fact than anything else you’re doing today? This is because our brains are normally focused on the little things in day-to-day life, no matter how crazy a long-term situation we’re a part of. It’s just how we’re wired.

Next Steps

If you’re inspired to learn more, I heartily encourage you. To get started, here are a few sources that have inspired me:

- This pair of 2015 blog posts by Tim Urban at Wait But Why: The AI Revolution: The Road to Superintelligence and The AI Revolution: Our Immortality or Extinction.

- Max Tegmark’s 2017 book: Life 3.0: Being Human in the Age of Artificial Intelligence

- Listen to the Sam Harris podcast episode The Future of Intelligence (with Max Tegmark) or watch Harris’s TED Talk titled Can we build AI without losing control over it?

- The “classic futurist’s bible” The Age of Spiritual Machines by Ray Kurzweil

- Follow along with news of AI on The Future of Life Institute’s blog

- Learn more about AI Safety at OpenAI

Max Tegmark asks these 7 questions at the beginning of Chapter 5 in Life 3.0. I’d love to have these be some of the jumping-off points for conversations both here on this site in the comments and back in your own lives. What do you think?

- Do you want there to be superintelligence?

- Do you want humans to still exist, be replaced, cyborg-ized, and/or uploaded/simulated?

- Do you want humans or machines in control?

- Do you want AIs to be conscious or not?

- Do you want to maximize positive experiences, minimize suffering, or leave this to sort itself out?

- Do you want life spreading into the cosmos?

- Do you want a civilization striving toward a greater purpose that you sympathize with, or are you OK with future life forms that appear content even if you view their goals as pointlessly banal?

You can take a survey featuring some of these concepts (and more) and see the results on the Future of Life website. Just take care with the twelve aftermath scenarios. For example, I thought the Egalitarian Utopia sounded good, but it isn’t clear from the survey alone that it entails suppressing any superintelligent AI development, which I oppose.

For my part, I am already planning to break this immense and important subject down further in future posts. If you have concepts, ideas, or questions you would like to see me research and write about, feel free to mention them in the comments or reach out to me directly.

This is such an immense topic that I ended up digressing to explain things in greater detail or to provide additional examples and these bogged the post down. There are still some important things I wanted to share, so I have included those in a separate endnotes post.
