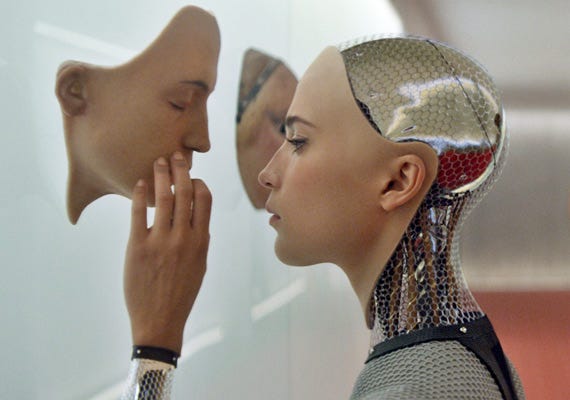

Earth has received a message from an alien intellect alerting us to their arrival in 30–50 years. There are promises of great advances and technologies — some of which we cannot even imagine. There is also the threat of being dominated, enslaved — or worse — annihilated.

This is not hypothetical fiction or fantasy. This is the actual state of the world today. We are 30 to 50 years away from the arrival of an intelligence that will dwarf our own by a wider margin than human intellect towers over that of ants. Many of us are even devoting our lives to welcoming these intelligent entities.

Editor’s note: Because this issue is so crucial to our lives, I’ve broken the original post down into six topics, each in its own post, to allow for easier reading.

This is a huge topic. That’s why the original post ran to more than 7,000 words and why I have broken it out into six separate posts. Even so, it didn’t cover nearly every aspect of the importance of AI to our future. My hope is to motivate readers to think about this, learn more, and have many conversations with their families and friends.

Here are the six topics. Feel free to jump around.

- An introduction to the opportunities and threats we face as we near the realization of human-level artificial intelligence (this post)

- What do we mean by intelligence, artificial or otherwise?

- The how, when, and what of AI

- AI and the bright future ahead

- AI and the bleak, darkness that could befall us

- What should we be doing about AI anyway?

Our entire planet should unify in purpose, set aside pettiness, and work together. We should earnestly prepare for this arrival and all of the astonishing and terrifying knowledge and technologies it will bring. We should create a plan to endear ourselves to these strange new forces. We should try to pass our values to them and share our goals with them to stave off the myriad threats these entities will present while ushering in wondrous advances, prosperity, and even immortality.¹

“The first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control.”

— Irving J. Good, 1965

Despite the fact that we are actively developing these new alien² intellects, we know nearly as little about these artificial intelligences as we would about an extraterrestrial visitor from another part of the cosmos. All we know for sure is that they are coming. The fun (and scary) part is speculating on what sorts of things they will bring with them.

Like you, I’ve been hearing about artificial intelligence (AI) for the better part of my life. And like you, I conjured up ideas of androids, robots, and a dystopian future. An emerging group of researchers, however, is urging that we not wait like passive onlookers for the future to unfold, but instead actively work toward creating the outcomes we want to see.

I, for one, want to see a fair justice system, safe transportation where millions don’t die each year, cities and buildings designed to truly accommodate our needs, an intelligent use of natural resources and efficient production of energy, the restoration of our wild areas — including reviving some extinct creatures, once again increasing biodiversity and repairing the environmental damage we’ve done, ending scarcity and the requirement of work, bringing about a new renaissance of creativity and innovation unlike anything the world has ever seen, the elimination of all ailments and disease, the eradication of suffering, and — most epically — an end to death itself.

These outcomes will all be possible if we succeed in developing artificial superintelligence and, more importantly, align its goals with our own.

Nuh-uh

When I tell people that they have bought their last car, that we’ll all soon eat only lab-grown meat, that we’ll colonize Mars in the next 20 years, or that they will be able to live forever if they can hold out for another 30–40 years, I get polite nods, indignant scoffs, incredulous questions, or outright rejections. But these things will likely happen.

You may be sitting there thinking this is all pretty ridiculous. You’re not going to concern yourself with some robot doomsday/fantasy scenario. Siri and Alexa can’t even answer simple questions or even understand what we ask of them half the time. It feels more right to be skeptical of my assertions of what will happen (and when). But is it right? If we use only logic and rationality and look at historical patterns, we should expect much, much more change to occur in the coming decades than we intuitively expect.

It is just so hard for us to imagine all the things that superhuman intelligence will make possible. And it will be easy for it.

Tim Urban ascribes our skepticism to three main things:

When it comes to history, we think in straight lines. When we imagine the progress of the next 30 years, we look back to the progress of the previous 30 as an indicator of how much will likely happen.

The trajectory of very recent history often tells a distorted story. First, even a steep exponential curve seems linear when you only look at a tiny slice of it, the same way if you look at a little segment of a huge circle up close, it looks almost like a straight line. Second, exponential growth isn’t totally smooth and uniform. Kurzweil explains that progress happens in “S-curves” (remember those?⁵).

Our own experience makes us stubborn old men about the future. We base our ideas about the world on our personal experience, and that experience has ingrained the rate of growth of the recent past in our heads as “the way things happen.” We’re also limited by our imagination, which takes our experience and uses it to conjure future predictions — but often, what we know simply doesn’t give us the tools to think accurately about the future.
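The claim that a short slice of an exponential curve looks like a straight line is easy to check for yourself. Here is a minimal sketch, not anything from Urban or Kurzweil: the function name `max_linear_error`, the 7% yearly growth rate, and the window lengths are all assumptions chosen for illustration. It draws a straight line between the two ends of a time window and measures how far the exponential curve strays from that line.

```python
import math

def max_linear_error(rate, start, width, samples=100):
    """Largest relative gap between exponential growth exp(rate * t)
    and the straight line joining the two endpoints of the window."""
    def curve(t):
        return math.exp(rate * t)

    y0, y1 = curve(start), curve(start + width)
    worst = 0.0
    for i in range(samples + 1):
        t = start + width * i / samples
        # Height of the straight line at time t
        line = y0 + (y1 - y0) * (t - start) / width
        worst = max(worst, abs(line - curve(t)) / curve(t))
    return worst

# Hypothetical growth rate of 7% per year (an assumption, not a measured figure)
one_year = max_linear_error(rate=0.07, start=0, width=1)
century = max_linear_error(rate=0.07, start=0, width=100)

print(f"1-year window:   line is off by at most {one_year:.4%}")
print(f"100-year window: line is off by at most {century:.0%}")
```

Over a single year the straight line is nearly indistinguishable from the curve. Over a century it misses badly. That is the trap of judging the next 30 years by the slice of history we happened to live through.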

Urban goes on to explain why not everyone is focused on this.

…movies have really confused things by presenting unrealistic AI scenarios that make us feel like AI isn’t something to be taken seriously in general. James Barrat compares the situation to our reaction if the Centers for Disease Control issued a serious warning about vampires in our future.

Due to something called cognitive biases, we have a hard time believing something is real until we see proof. I’m sure computer scientists in 1988 were regularly talking about how big a deal the internet was likely to be, but people probably didn’t really think it was going to change their lives until it actually changed their lives. This is partially because computers just couldn’t do stuff like that in 1988, so people would look at their computer and think, “Really? That’s gonna be a life changing thing?” Their imaginations were limited to what their personal experience had taught them about what a computer was, which made it very hard to vividly picture what computers might become. The same thing is happening now with AI. We hear that it’s gonna be a big deal, but because it hasn’t happened yet, and because of our experience with the relatively impotent AI in our current world, we have a hard time really believing this is going to change our lives dramatically. And those biases are what experts are up against as they frantically try to get our attention through the noise of collective daily self-absorption.

Even if we did believe it — how many times today have you thought about the fact that you’ll spend most of the rest of eternity not existing? Not many, right? Even though it’s a far more intense fact than anything else you’re doing today? This is because our brains are normally focused on the little things in day-to-day life, no matter how crazy a long-term situation we’re a part of. It’s just how we’re wired.

Other parts in this series:

Part Two: What do we mean by intelligence, artificial or otherwise?

Part Three: The how, when, and what of AI

Part Four: AI and the bright future ahead

Part Five: AI and the bleak, darkness that could befall us

Part Six: What should we be doing about AI anyway?

This is such an immense topic that I kept digressing to explain things in greater detail or to provide additional examples, and those digressions bogged the post down. There are still some important things I wanted to share, so I have included those in a separate endnotes post.
