Editor’s note: Because this issue is so crucial to our lives, I’ve broken the original post down into six topics, each in its own post to allow for easier reading.

This is a huge topic. That’s why the original post ran to more than 7,000 words and why I have broken it out into six separate posts. Even so, it didn’t cover nearly every aspect of the importance of AI to our future. My hope is to motivate readers to think about this, learn more, and have many conversations with their families and friends.

Feel free to jump around.

- An introduction to the opportunities and threats we face as we near the realization of human-level artificial intelligence

- What do we mean by intelligence, artificial or otherwise?

- The how, when, and what of AI (this post)

- AI and the bright future ahead

- AI and the bleak darkness that could befall us

- What should we be doing about AI anyway?

1. How probable is it that we’ll develop AI that is smarter than humans?

Of the three questions, this is the least fun but also, perhaps, the easiest to answer. At a recent conference of people working at the forefront of artificial intelligence, participants were asked when human-level intelligence would be achieved, and fewer than 2% answered “never.” This poll asked specifically about AGI, not ASI. However, many people (including me) believe that the difficult task is developing an AI advanced enough to teach itself new things (AGI), and that a superintelligent version will emerge quite quickly thereafter. Some think this could take years or decades once we arrive at AGI. Others warn that it could take just hours after the first AGI is developed, due to the speed of recursive self-improvement. This capacity for recursive self-improvement is exactly what many software engineers hope to instill in their programs to speed development. It is also what could very easily take our world from no human-level AIs, to one, to the first superhuman AI very rapidly.
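Why might the jump from AGI to ASI take hours rather than decades? One simple way to picture recursive self-improvement is a toy model, sketched below under a purely illustrative assumption (not taken from any researcher): each improvement "step" takes a fixed fraction, `speedup_ratio`, of the time the previous step took, because a smarter system improves itself faster. The function names and the numbers used (a 10-year first step, each later step 10x faster) are hypothetical placeholders, not a forecast.

```python
# Toy model of recursive self-improvement (hypothetical parameters, not a forecast).
# Assumption: each improvement "step" takes a fixed fraction of the time the
# previous step took, because a smarter system improves itself faster.

def step_durations(first_step_years: float, speedup_ratio: float, steps: int) -> list[float]:
    """Duration of each successive step: step k takes first_step_years * speedup_ratio**k."""
    if not 0 < speedup_ratio < 1:
        raise ValueError("speedup_ratio must be between 0 and 1")
    return [first_step_years * speedup_ratio ** k for k in range(steps)]


def time_limit(first_step_years: float, speedup_ratio: float) -> float:
    """Sum of the infinite geometric series: the whole climb never takes longer than this."""
    return first_step_years / (1 - speedup_ratio)


if __name__ == "__main__":
    HOURS_PER_YEAR = 365 * 24
    # Hypothetical: the first step takes 10 years and each later step is 10x faster.
    durations = step_durations(first_step_years=10.0, speedup_ratio=0.1, steps=8)
    elapsed = 0.0
    for k, d in enumerate(durations):
        elapsed += d
        print(f"step {k + 1}: {d * HOURS_PER_YEAR:12.4f} hours (total {elapsed:.4f} years)")
    print(f"upper bound on total time: {time_limit(10.0, 0.1):.4f} years")
```

The design point is the geometric series: with any ratio below 1, total time is capped at `first_step_years / (1 - speedup_ratio)`. With these placeholder numbers, all the steps after the first one together take barely over a year. Real AI progress need not follow this pattern; the sketch only shows why "years, then hours" is arithmetically plausible once each step speeds up the next.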

While a few skeptics still believe we will never develop human-level intelligence, or that it won’t happen for hundreds of years, a growing consensus among those closest to these efforts⁴ holds that it will happen, and soon…

2. When might we develop such an AI?

Here’s where we are right now:

We’re at this point in time, just before a massive spike in human progress. This has happened before, with the Industrial Revolution and the advent of the Internet, but this spike will dwarf those. Because of the Law of Accelerating Returns (the idea that advancement is an evolutionary process that speeds up exponentially over time), life in 2040, just 23 years away, may be as different from today as life today is from medieval times. It may be so different that we can no more picture it than a medieval serf could understand the technologies used to build a website or a cellphone.

When we overlay the timeline and effects of superintelligent AI systems on our expected lifetimes, we see the enormous, wondrous, and scary impacts that we (you and I) can expect. Let’s check in on those same AI researchers for their expert opinions. A large majority (more than 68%) believed we would develop AGI by 2050, and 43% believed it would happen by 2030.

So experts believe there is a good chance that within 13 to 33 years we will have developed a computer program at least as intelligent as we are. That intelligence would span many different types, including the ability to learn on its own.

It seems hard to fathom it going this fast. It doesn’t feel like things will advance this quickly. If you’re like me, you read about new tech daily and buy the latest and greatest gadgets and gizmos. The pace of technological advancement is slooooow, right? But our perception is flawed.

“Advances are getting bigger and bigger and happening more and more quickly.” — Tim Urban

One of the most optimistic and vocal proponents of this coming era is Ray Kurzweil. He coined the Law of Accelerating Returns mentioned above, based many of his predictions on it, and expects AGI to arrive by 2029. Again I turn to Wait But Why:

“The average rate of advancement between 1985 and 2015 was higher than the rate between 1955 and 1985 — because the former was a more advanced world — so much more change happened in the most recent 30 years than in the prior 30.”

Kurzweil “believes another 20th century’s worth of progress happened between 2000 and 2014 and that another 20th century’s worth of progress will happen by 2021, in only seven years. A couple decades later, he believes a 20th century’s worth of progress will happen multiple times in the same year, and even later, in less than one month.” All in all, he expects “that the 21st century will achieve 1,000 times the progress of the 20th century.”

This is the power of exponential growth, as opposed to the linear growth we tend to assume.⁵
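To make the gap between linear and exponential growth concrete, here is a toy sketch, not a forecast. It assumes a hypothetical quantity of "progress" that starts at 1 unit and either gains a fixed 1 unit per year (linear) or doubles every year (exponential). The constants and function names are illustrative assumptions, not figures from Kurzweil, Urban, or the survey above.

```python
import math

# Toy model (hypothetical numbers): both curves start at 1 "unit of progress"
# and both gain exactly 1 unit during the first year.
START = 1.0
LINEAR_GAIN_PER_YEAR = 1.0  # linear: add a fixed amount each year
DOUBLING_TIME_YEARS = 1.0   # exponential: double every year


def linear_progress(years: float) -> float:
    """Progress after `years` if it grows by a fixed amount per year."""
    return START + LINEAR_GAIN_PER_YEAR * years


def exponential_progress(years: float) -> float:
    """Progress after `years` if it doubles every DOUBLING_TIME_YEARS."""
    return START * 2 ** (years / DOUBLING_TIME_YEARS)


def years_to_reach_linear(target: float) -> float:
    """Invert linear_progress: years needed to reach `target`."""
    return (target - START) / LINEAR_GAIN_PER_YEAR


def years_to_reach_exponential(target: float) -> float:
    """Invert exponential_progress: years needed to reach `target`."""
    return DOUBLING_TIME_YEARS * math.log2(target / START)


if __name__ == "__main__":
    # The curves agree after one year, which is why early progress "feels" linear.
    for year in (0, 1, 2, 5, 10):
        print(f"year {year:>2}: linear={linear_progress(year):6.1f}  "
              f"exponential={exponential_progress(year):8.1f}")

    target = 1000.0
    print(f"years to reach {target:.0f}: linear={years_to_reach_linear(target):.0f}, "
          f"exponential={years_to_reach_exponential(target):.1f}")
```

Running it shows the two curves identical after year one, yet reaching 1,000 units takes 999 years on the linear path and about 10 on the exponential one. That is the intuition behind calling a later date "a linear extrapolation": if you judge the future by the slope you have felt so far, you underestimate how quickly a doubling process compounds.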

Probably closer to 2030 to 2040 imo. 2060 would be a linear extrapolation, but progress is exponential. https://t.co/e6gyOVcMZG

— Elon Musk (@elonmusk) June 6, 2017

3. What impact will such development have on humanity?

The remainder of this series will lay out what life could look like once we are no longer the most (generally) intelligent being on the planet. We’ll touch on both the good and bad outcomes (for humans) and describe some ways in which we could hope to nudge things toward the better outcomes.

It will likely be impossible to know exactly “what” and “how” these AIs are thinking. My guess is they will have advanced through a combination of directed programming and training from humans as well as recursive self-development efforts of their own. Once they can efficiently self-improve, we will quickly lose our ability to know what the hell is going on within them or comprehend what they are doing. It’s not just that an ASI will think a million⁶ times faster than we do; the quality of its thinking will be that much better, too.

Here’s an example from Wait But Why that illustrates the gulf between human cognition and that of chimps.

“What makes humans so much more intellectually capable than chimps isn’t a difference in thinking speed — it’s that human brains contain a number of sophisticated cognitive modules that enable things like complex linguistic representations or long-term planning or abstract reasoning, that chimps’ brains do not. Speeding up a chimp’s brain by thousands of times wouldn’t bring him to our level — even with a decade’s time, he wouldn’t be able to figure out how to use a set of custom tools to assemble an intricate model, something a human could knock out in a few hours. There are worlds of human cognitive function a chimp will simply never be capable of, no matter how much time he spends trying.

But it’s not just that a chimp can’t do what we do, it’s that his brain is unable to grasp that those worlds even exist — a chimp can become familiar with what a human is and what a skyscraper is, but he’ll never be able to understand that the skyscraper was built by humans. In his world, anything that huge is part of nature, period, and not only is it beyond him to build a skyscraper, it’s beyond him to realize that anyone can build a skyscraper. That’s the result of a small difference in intelligence quality.

And in the scheme of the intelligence range we’re talking about today or even the much smaller range among biological creatures, the chimp-to-human quality intelligence gap is tiny.”

And it is tiny. We are pretty close in intellect to chimps — no offense. In fact, there are intellectual tasks that chimps can perform better than us.

Chimps can recognize and recall certain patterns far better than we can. Don’t be so full of yourself.

Now imagine a superintelligent machine that sits the same cognitive distance above us as we sit above chimps. In this scenario, we’re the chimps, and the machine is trying to explain things we cannot comprehend. Even a gap this small in level opens a huge chasm in understanding.

Now let’s examine the much larger gap between us and ants. An ant building an anthill beside a highway has no idea what that highway is. It cannot possibly comprehend how we built it, what we use it for, what benefits it gives us, or even who we are. Trying to explain any of this to the ant is preposterous, and for good reason: even if we could somehow transmit our language or thoughts to ants, they simply could not comprehend any of it.

A machine with the sort of smarts we’re talking about would be about as far above us as we are above ants. The gap would be huge. We could not comprehend what the machine was doing, and it would be pointless for the machine to try to explain it to us. Tim Urban uses a staircase metaphor to illustrate this.

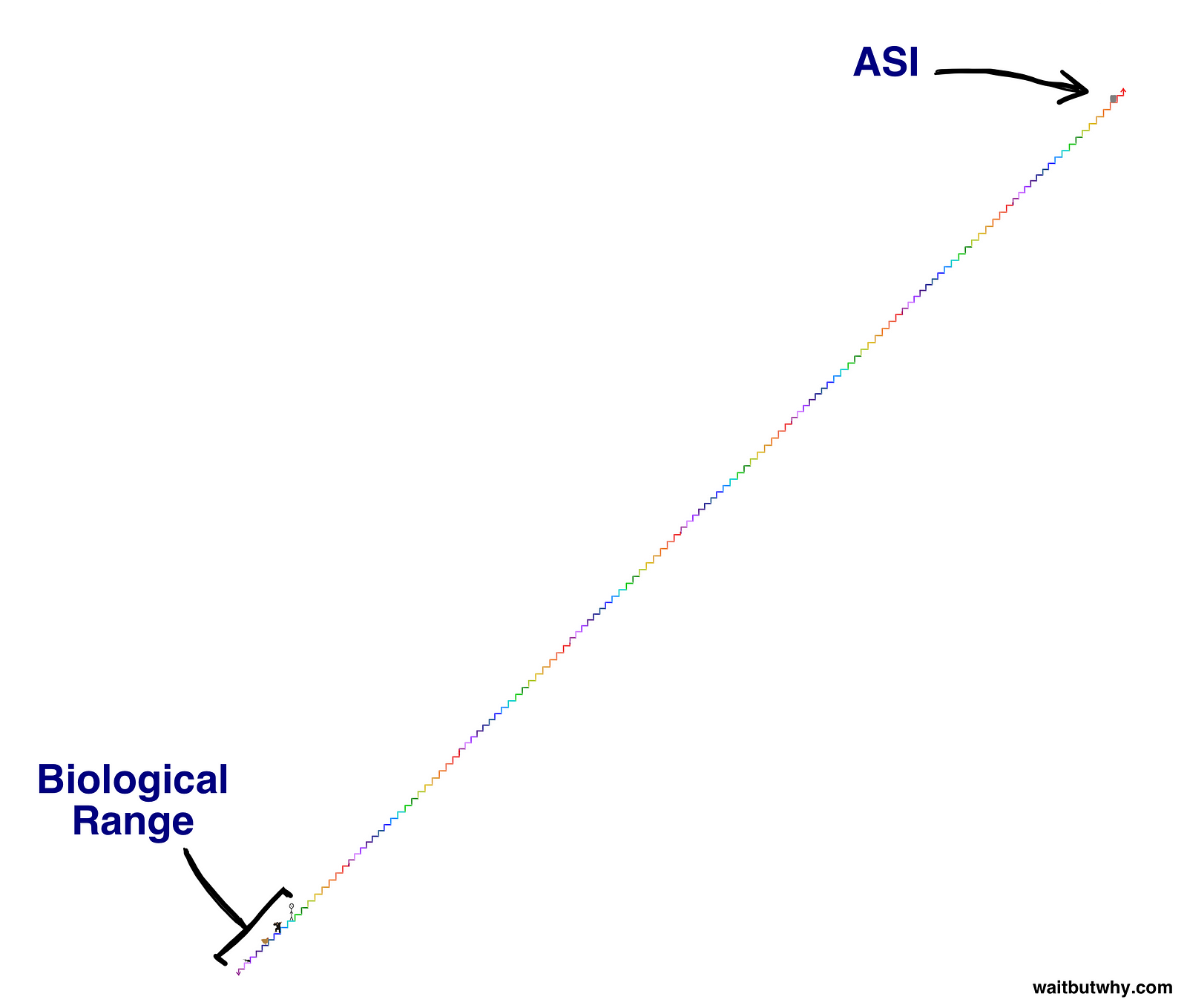

“In an intelligence explosion — where the smarter a machine gets, the quicker it’s able to increase its own intelligence, until it begins to soar upwards — a machine might take years to rise from the chimp step to the one above it, but perhaps only hours to jump up a step once it’s on the dark green step two above us, and by the time it’s ten steps above us, it might be jumping up in four-step leaps every second that goes by. Which is why we need to realize that it’s distinctly possible that very shortly after the big news story about the first machine reaching human-level AGI, we might be facing the reality of coexisting on the Earth with something that’s here on the staircase (or maybe a million times higher).”

The biological range of intelligence on the left, and an ASI well above that range on the right.

“And since we just established that it’s a hopeless activity to try to understand the power of a machine only two steps above us, let’s very concretely state once and for all that there is no way to know what ASI will do or what the consequences will be for us. Anyone who pretends otherwise doesn’t understand what superintelligence means.”

OK, enough of making ourselves feel inferior. All of this is a way of saying that the things this uber-powerful, intelligent machine will be able to do will be amazing. Let’s get into some of them.

Other parts in this series:

Part One: An introduction to the opportunities and threats we face as we near the realization of human-level artificial intelligence

Part Two: What do we mean by intelligence, artificial or otherwise?

Part Four: AI and the bright future ahead

Part Five: AI and the bleak darkness that could befall us

Part Six: What should we be doing about AI anyway?

This is such an immense topic that I ended up digressing to explain things in greater detail or to provide additional examples, and these digressions bogged the post down. There are still some important things I wanted to share, so I have included them in a separate endnotes post.
